Chapter 2 – IT Technology

Learning Objectives

2.1 Describe the evolution and role of information technology hardware.

2.2 Describe the evolution and role of information technology software.

Information technology hardware

Hardware, or information technology hardware, truly began in the early 1900s as mechanical devices and theoretical frameworks. With electricity and other technological advances, these devices and frameworks slowly morphed into the recognizable computer hardware that we use today. As hardware has evolved, it has largely followed one main ‘law’, referred to as Moore’s law. Moore’s law, which is an observation rather than a law of nature, states that the number of transistors in a dense integrated circuit doubles about every two years. This doubling of transistors in the same space has, directly and indirectly, brought about a few consistent changes:

- Hardware size has greatly decreased over time.

- Data transfer between devices has become much faster over time.

- Hardware costs have greatly decreased over time.

These trends have made data storage affordable, allowing organizations and individuals to store tremendous amounts of data while also allowing that data to be processed in almost real time. The old rule was that data should be worth the cost of storing it; however, data now costs very little to store, and its value may turn out to be high in the future. Organizations and individuals can now store almost any data they want; the main costs have shifted away from prohibitively expensive hardware and toward the management and security of that data.
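To make the doubling in Moore’s law concrete, the short Python sketch below projects a transistor count forward, assuming exactly one doubling every two years. The starting point (about 2,300 transistors in 1971, roughly the count of Intel’s first microprocessor, the 4004) and the function name `projected_transistors` are illustrative assumptions, not measured data; real chips have never tracked the curve exactly.

```python
def projected_transistors(start_count: int, start_year: int, year: int,
                          doubling_years: float = 2.0) -> float:
    """Project a transistor count assuming one doubling every `doubling_years` years."""
    elapsed = year - start_year
    # Each full doubling period multiplies the count by 2.
    return start_count * 2 ** (elapsed / doubling_years)


if __name__ == "__main__":
    # Illustrative starting point: about 2,300 transistors in 1971.
    for year in range(1971, 2012, 10):
        print(year, round(projected_transistors(2300, 1971, year)))
```

Ten years is five doublings, so the projection grows by a factor of 32 each decade, which is why the effects on size and cost described above compound so quickly.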

The physical parts of computing devices – those that you can actually touch – are referred to as hardware. The opposite of hardware is software, which you cannot physically touch. What makes hardware difficult to classify is that most hardware contains software. The hardware that typical users interact with also takes in and puts out information, which means these devices are not only hardware but also ‘mini’ information systems! These mini systems are commonly referred to as digital devices, or simply devices.

This includes devices such as the following:

- desktop computers

- laptop computers

- mobile phones

- tablet computers

- e-readers

- storage devices, such as flash drives

- input devices, such as keyboards, mice, and scanners

- output devices, such as printers and speakers.

Besides these more traditional computer hardware devices, many items that were once not considered digital devices are now becoming computerized themselves. Digital technologies are now being integrated into many everyday objects, so the days of a device being labeled categorically as computer hardware may be ending. Examples of these types of digital devices include automobiles, refrigerators, and even soft-drink dispensers.

Personal computers

A personal computer is designed to be a general-purpose device. That is, it can be used to solve many different types of problems. As the technologies of the personal computer have become more commonplace, many of the components have been integrated into other devices that previously were purely mechanical. We have also seen an evolution in what defines a computer. Ever since the invention of the personal computer, users have clamored for a way to carry it around. Here we will examine several types of devices that represent the latest trends in personal computing.

Portable computers

In 1983, Compaq Computer Corporation developed the first commercially successful portable personal computer. By today’s standards, the Compaq PC was not very portable: weighing in at 28 pounds, this computer was portable only in the most literal sense – it could be carried around. But this was no laptop; the computer was designed like a suitcase, to be lugged around and laid on its side to be used. Besides portability, the Compaq was successful because it was fully compatible with the software that ran on the IBM PC, which was the standard for business.

In the years that followed, portable computing continued to improve, giving us laptop and notebook computers. The “luggable” computer has given way to a much lighter clamshell computer that weighs from 4 to 6 pounds and runs on batteries. In fact, the most recent advances in technology give us a new class of laptop that is quickly becoming the standard: these laptops are extremely light and portable and use less power than their larger counterparts. The MacBook Air is a good example of this: it weighs less than three pounds and is only 0.68 inches thick!

Finally, as more and more organizations and individuals are moving much of their computing to the Internet, laptops are being developed that use “the cloud” for all of their data and application storage. These laptops are also extremely light because they need little or no local disk storage! A good example of this type of laptop (sometimes called a netbook) is Samsung’s Chromebook.

Smartphones

The first modern-day mobile phone was invented in 1973. Resembling a brick and weighing about two pounds, it did not go on sale until 1983, when it was priced out of reach for most consumers at nearly four thousand dollars. Since then, mobile phones have become smaller and less expensive; today mobile phones are a modern convenience available to all levels of society. As mobile phones evolved, they became more like small computers. These smartphones have many of the same characteristics as a personal computer, such as an operating system and memory. The first smartphone was the IBM Simon, introduced in 1994.

In January of 2007, Apple introduced the iPhone. Its ease of use and intuitive interface made it an immediate success and solidified the future of smartphones. Running on an operating system originally called iPhone OS and later renamed iOS, the iPhone was really a small computer with a touch-screen interface. In 2008, the first Android phone was released, with similar functionality.

Tablet computers

A tablet computer is one that uses a touch screen as its primary input and is small enough and light enough to be carried around easily. They generally have no keyboard and are self-contained inside a rectangular case. The first tablet computers appeared in the early 2000s and used an attached pen as a writing device for input. These tablets ranged in size from small personal digital assistants (PDAs), which were handheld, to full-sized, 14-inch devices. Most early tablets used a version of an existing computer operating system, such as Windows or Linux.

These early tablet devices were, for the most part, commercial failures. In January 2010, Apple introduced the iPad, which ushered in a new era of tablet computing. Instead of a pen, the iPad used the finger as the primary input device. Instead of using the operating system of its desktop and laptop computers, Apple chose to use iOS, the operating system of the iPhone. Because the iPad had the same user interface as the iPhone, consumers felt comfortable, and sales took off. The iPad has set the standard for tablet computing. After the success of the iPad, computer manufacturers began to develop new tablets that used operating systems designed for mobile devices, such as Android.

The commoditization of the personal computer

Over the past thirty years, as the personal computer has gone from technical marvel to part of our everyday lives, it has also become a commodity. The PC has become a commodity in the sense that there is very little differentiation between computers, and the primary factor that controls their sale is their price. Hundreds of manufacturers all over the world now create parts for personal computers. Dozens of companies buy these parts and assemble the computers. Because PCs are commodities, there are essentially no differences between computers made by these different companies. Profit margins for personal computers are razor-thin, leading hardware developers to seek the lowest-cost manufacturing.

There is one brand of computer for which this is not the case – Apple. Because Apple does not make computers that run on the same open standards as other manufacturers, it can make a unique product that no one can easily copy. By creating what many consider to be a superior product, Apple can charge more for its computers than other manufacturers. Just as with the iPad and iPhone, Apple has chosen a strategy of differentiation, which, at least at this time, seems to be paying off.

Information technology software

The second component of an information system is software. Simply put: Software is the set of instructions that tell the hardware what to do. Software is created through the process of programming. Without software, the hardware would not be functional.

Types of software

Software can be broadly divided into two categories: operating systems and application software. Operating systems manage the hardware and create the interface between the hardware and the user. Application software is the category of programs that do something useful for the user.

Operating systems

The operating system provides several essential functions, including:

1. managing the hardware resources of the computer,

2. providing the user-interface components,

3. providing a platform for software developers to write applications.
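To illustrate the third function, a platform for developers, here is a minimal Python sketch in which a program asks the operating system about itself through Python’s standard `platform` and `os` modules. The helper name `describe_host` is hypothetical, and the values it prints depend on whichever operating system runs it.

```python
import os
import platform


def describe_host() -> dict:
    """Collect a few facts that the operating system exposes to programs."""
    return {
        "os_name": platform.system(),      # e.g. 'Windows', 'Darwin' (macOS), or 'Linux'
        "os_release": platform.release(),  # the operating system's release string
        "logical_cpus": os.cpu_count(),    # None if the OS cannot report it
        "current_dir": os.getcwd(),        # the working directory the OS assigned
    }


if __name__ == "__main__":
    for key, value in describe_host().items():
        print(f"{key}: {value}")
```

The program never touches the processor or disk directly; it asks the operating system, which manages those hardware resources on its behalf.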

Nearly all general-purpose computing devices run an operating system. For personal computers, the most popular operating systems are Microsoft’s Windows, Apple’s OS X (now called macOS), and different versions of Linux. Smartphones and tablets run operating systems as well, such as Apple’s iOS, Google’s Android, Microsoft’s Windows Mobile, and BlackBerry OS.

Early personal-computer operating systems were simple by today’s standards; they did not provide multitasking and required the user to type commands to initiate an action. The amount of memory that early operating systems could handle was limited as well, making large programs impractical to run. The most popular of the early operating systems was IBM’s Disk Operating System, or DOS, which Microsoft developed for IBM.

In 1984, Apple introduced the Macintosh computer, featuring an operating system with a graphical user interface. Though not the first graphical operating system, it was the first one to find commercial success. In 1985, Microsoft released the first version of Windows. This version of Windows was not an operating system; instead, it was an application that ran on top of the DOS operating system, providing a graphical environment. It was quite limited and had little commercial success. It was not until the 1990 release of Windows 3.0 that Microsoft found success with a graphical user interface. Because IBM and IBM-compatible personal computers dominated business computing, Windows 3.0 was also what brought business users to the graphical user interface, ushering us into the graphical-computing era. Since 1990, both Apple and Microsoft have released many new versions of their operating systems, with each release adding the ability to process more data at once and access more memory. Features such as multitasking, virtual memory, and voice input have become standard features of both operating systems.

A third personal-computer operating system family that is gaining in popularity is Linux (pronounced “linn-ex”). Linux is a Unix-like operating system that runs on the personal computer. Unix is an operating system that was used primarily by scientists and engineers on larger minicomputers. These were very expensive computers, and software developer Linus Torvalds wanted to find a way to run a Unix-like system on less expensive personal computers. Linux was the result. Linux has many variations and now powers a large percentage of web servers in the world. It is also an example of open-source software, a topic we will cover later in this chapter.

Application software

The second major category of software is application software. Application software is, essentially, software that allows the user to accomplish some goal or purpose. For example, if you must write a paper, you might use the application-software program Microsoft Word. If you want to listen to music, you might use iTunes. To surf the web, you might use Internet Explorer or Firefox. Even a computer game could be considered application software.

Productivity software

When a new type of digital device is invented, there is generally a small group of technology enthusiasts who will purchase it just for the joy of figuring out how it works. However, for most of us, until a device can actually do something useful, we are not going to spend our hard-earned money on it. A “killer” application is one that becomes so essential that large numbers of people will buy a device just to run that application. For the personal computer, the killer application was the spreadsheet. In 1979, VisiCalc, the first personal-computer spreadsheet package, was introduced. It was an immediate hit and drove sales of the Apple II. It also solidified the value of the personal computer beyond the relatively small circle of technology geeks. After the IBM PC was released, another spreadsheet program, Lotus 1-2-3, became the killer app for business.

Along with the spreadsheet, several other software applications have become standard tools for the workplace. These applications, called productivity software, allow office employees to complete their daily work. Many times, these applications come packaged together, such as in Microsoft’s Office suite. Here is a list of these applications and their basic functions:

• Word processing: This class of software provides for the creation of written documents. Functions include the ability to type and edit text, format fonts and paragraphs, and add, move, and delete text throughout the document. Most modern word-processing programs also have the ability to add tables, images, and various layout and formatting features to the document. Word processors save their documents as electronic files in a variety of formats. By far, the most popular word-processing package is Microsoft Word, which saves its files in the DOCX format. This format can be read and written by many other word-processor packages.

• Spreadsheet: This class of software provides a way to do numeric calculations and analysis. The working area is divided into rows and columns, where users can enter numbers, text, or formulas. It is the formulas that make a spreadsheet powerful, allowing the user to develop complex calculations that can change based on the numbers entered. Most spreadsheets also include the ability to create charts based on the data entered. The most popular spreadsheet package is Microsoft Excel, which saves its files in the XLSX format. Just as with word processors, many other spreadsheet packages can read and write this file format.

• Presentation: This class of software provides for the creation of slideshow presentations. Harkening back to the days of overhead projectors and transparencies, presentation software allows its users to create a set of slides that can be printed or projected on a screen. Users can add text, images, and other media elements to the slides. Microsoft’s PowerPoint is currently the most popular presentation package, saving its files in PPTX format.

• Some office suites include other types of software. For example, Microsoft Office includes Outlook, its e-mail package, and OneNote, an information-gathering collaboration tool. The professional version of Office also includes Microsoft Access, a database package.
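The spreadsheet entry above notes that formulas are what make a spreadsheet powerful, because results change when the numbers entered change. The toy Python sketch below mimics that idea under simple assumptions: the `Sheet` class is hypothetical, and Python lambdas stand in for spreadsheet formulas such as `=A1*A2`. Real spreadsheet programs track which cells depend on which and recalculate far more cleverly.

```python
from typing import Callable, Dict, Union

# A cell holds either a plain number or a formula that reads other cells.
Cell = Union[float, Callable[["Sheet"], float]]


class Sheet:
    """A toy spreadsheet whose formulas are recomputed every time they are read."""

    def __init__(self) -> None:
        self.cells: Dict[str, Cell] = {}

    def set(self, name: str, value: Cell) -> None:
        self.cells[name] = value

    def get(self, name: str) -> float:
        value = self.cells[name]
        # Formulas are evaluated on demand, so changing an input changes every dependent result.
        return value(self) if callable(value) else value


if __name__ == "__main__":
    sheet = Sheet()
    sheet.set("A1", 120.0)  # units sold
    sheet.set("A2", 2.5)    # price per unit
    sheet.set("A3", lambda s: s.get("A1") * s.get("A2"))  # like the formula =A1*A2
    print(sheet.get("A3"))  # 300.0
    sheet.set("A1", 200.0)  # change an input...
    print(sheet.get("A3"))  # ...and the formula's result follows: 500.0
```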

Microsoft popularized the idea of the office-software productivity bundle with its release of Microsoft Office. This package continues to dominate the market, and most businesses expect employees to know how to use this software. However, many competitors to Microsoft Office do exist and are compatible with the file formats used by Microsoft (see table below). More recently, Microsoft has begun to offer a web version of its Office suite. Similar to Google Drive, this suite allows users to edit and share documents online using cloud-computing technology. Cloud computing will be discussed later in this chapter.

Utility software and programming software

Two subcategories of application software worth mentioning are utility software and programming software. Utility software includes software that allows you to fix or modify your computer in some way. Examples include antivirus software and disk defragmentation software. These types of software packages were invented to make up for shortcomings in operating systems. Many times, a subsequent release of an operating system will include these utility functions as part of the operating system itself.
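Many utilities perform simple housekeeping checks on the computer. As a hedged illustration, this small Python sketch reports disk space, the kind of check a disk-management utility might perform, using the standard library’s `shutil.disk_usage`. The function name `disk_report` is hypothetical, and real utilities such as antivirus scanners or defragmenters do far more than this.

```python
import shutil


def disk_report(path: str = ".") -> str:
    """Summarize total, used, and free space on the drive that holds `path`."""
    usage = shutil.disk_usage(path)  # named tuple of byte counts: total, used, free
    gib = 1024 ** 3
    percent_used = usage.used / usage.total * 100
    return (f"total {usage.total / gib:.1f} GiB, "
            f"used {usage.used / gib:.1f} GiB ({percent_used:.0f}%), "
            f"free {usage.free / gib:.1f} GiB")


if __name__ == "__main__":
    print(disk_report())
```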

Programming software is software whose purpose is to make more software. Most of these programs provide programmers with an environment in which they can write the code, test it, and convert it into the format that can then be run on a computer.

Mobile applications

Just as with the personal computer, mobile devices such as tablet computers and smartphones also have operating systems and application software. In fact, these mobile devices are in many ways just smaller versions of personal computers. A mobile app is a software application programmed to run specifically on a mobile device.

Smartphones and tablets are becoming a dominant form of computing, with many more smartphones being sold than personal computers. This means that organizations will have to get smart about developing software for mobile devices in order to stay relevant.

As organizations consider making their digital presence compatible with mobile devices, they will have to decide whether to build a mobile app. A mobile app is an expensive proposition, and it will run on only one type of mobile platform. For example, if an organization creates an iPhone app, those with Android phones cannot run the application. Each app takes several thousand dollars to create, so this is not a trivial decision for many companies.

One option many companies have is to create a website that is mobile-friendly. A mobile website works on all mobile devices and costs about the same as creating an app.

Cloud computing

Historically, for software to run on a computer, an individual copy of the software had to be installed on the computer, either from a disk or, more recently, after being downloaded from the Internet. The concept of “cloud” computing changes this, however.

To understand cloud computing, we first have to understand what the cloud is. “The cloud” refers to applications, services, and data storage on the Internet. The companies that provide these services rely on giant server farms and massive storage devices that are connected via Internet protocols. Cloud computing is the use of these services by individuals and organizations.

You probably already use cloud computing in some form. For example, if you access your e-mail via your web browser, you are using a form of cloud computing. If you use Google Drive’s applications, you are using cloud computing. While these are free versions of cloud computing, there is big business in providing applications and data storage over the web. Salesforce is a good example of cloud computing – its entire suite of customer relationship management (CRM) applications is offered via the cloud. Cloud computing is not limited to web applications: it can also be used for services such as phone or video streaming.

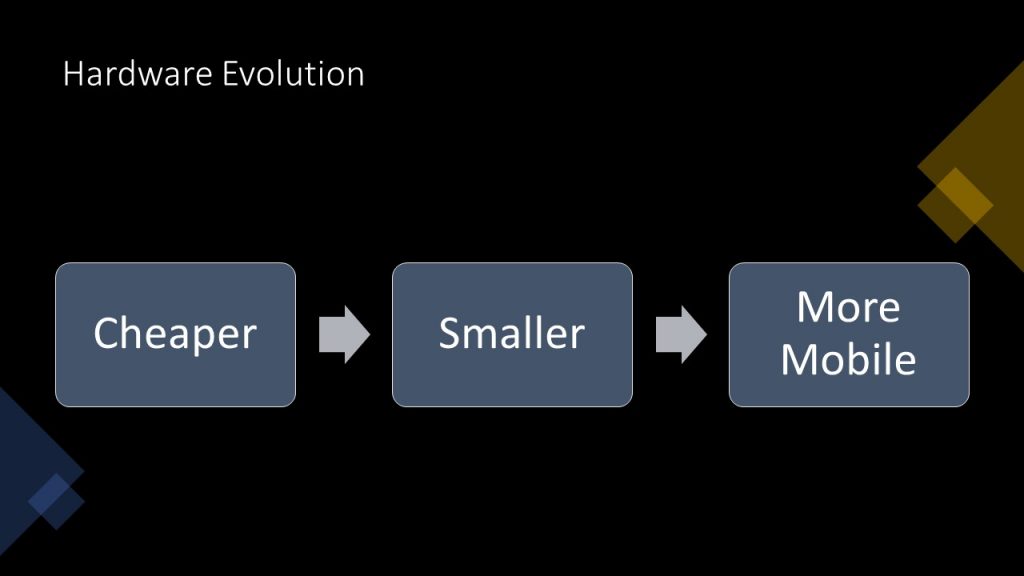

Advantages of Cloud Computing

• No software to install or upgrades to maintain.

• Available from any computer that has access to the Internet.

• Can scale to a large number of users easily.

• New applications can be up and running very quickly.

• Services can be leased for a limited time on an as-needed basis.

• Your information is not lost if your hard disk crashes or your laptop is stolen.

• You are not limited by the available memory or disk space on your computer.

Disadvantages of Cloud Computing

• Your information is stored on someone else’s computer – how safe is it?

• You must have Internet access to use it. If you do not have access, you’re out of luck.

• You are relying on a third party to provide these services.

Cloud computing could significantly change how organizations manage technology. For example, why is an IT department needed to purchase, configure, and manage personal computers and software when all that is really needed is an Internet connection?

Using a private cloud

Many organizations are understandably nervous about giving up control of their data and some of their applications by using cloud computing. But they also see the value in reducing the need for installing software and adding disk storage to local computers. A solution to this problem lies in the concept of a private cloud. While there are various models of a private cloud, the basic idea is for the cloud service provider to section off web server space for a specific organization. The organization has full control over that server space while still gaining some of the benefits of cloud computing.

Virtualization

One technology that is used extensively as part of cloud computing is “virtualization.” Virtualization is the process of using software to simulate a computer or some other device. For example, using virtualization, a single computer can perform the functions of several computers. Companies such as VMware (formerly part of EMC) provide virtualization software that allows cloud service providers to provision web servers to their clients quickly and efficiently. Organizations are also implementing virtualization in order to reduce the number of servers needed to provide the necessary services.
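Commercial virtualization products work at a much lower level than anything shown here, but the core idea that software can simulate a computer can be sketched in a few lines of Python. The `ToyMachine` class and its three-instruction set (`LOAD`, `ADD`, `MUL`) are invented for illustration; strictly speaking this is a simple emulator, not a hypervisor of the kind cloud providers use.

```python
from typing import List, Tuple

# Each instruction is (opcode, operand). This instruction set is invented for illustration.
Program = List[Tuple[str, int]]


class ToyMachine:
    """A tiny simulated computer with a single accumulator register."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.acc = 0  # the simulated machine's only register

    def run(self, program: Program) -> int:
        for opcode, operand in program:
            if opcode == "LOAD":
                self.acc = operand
            elif opcode == "ADD":
                self.acc += operand
            elif opcode == "MUL":
                self.acc *= operand
            else:
                raise ValueError(f"unknown opcode {opcode!r}")
        return self.acc


if __name__ == "__main__":
    # One physical computer hosting several independent simulated machines.
    guests = [ToyMachine("guest-1"), ToyMachine("guest-2")]
    programs = [
        [("LOAD", 2), ("ADD", 3)],                # leaves 5 in the register
        [("LOAD", 4), ("MUL", 10), ("ADD", 1)],   # leaves 41 in the register
    ]
    for machine, program in zip(guests, programs):
        print(machine.name, machine.run(program))
```

Each simulated machine keeps its own register state, so the two "guests" run side by side on one real computer without interfering, which is the property that lets a single physical server stand in for several.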

Software creation

How is software created? If software is the set of instructions that tells the hardware what to do, how are these instructions written? If a computer reads everything as ones and zeroes, do we have to learn how to write software that way?

Modern software applications are written using a programming language. A programming language consists of a set of commands and syntax that can be organized logically to execute specific functions. This language generally consists of a set of readable words combined with symbols. Using this language, a programmer writes a program (called the source code) that can then be compiled into machine-readable form, the ones and zeroes necessary to be executed by the CPU. Examples of well-known programming languages today include Java, PHP, and languages in the C family (C, C++, C#). Languages such as HTML and JavaScript are used to develop web pages. Most of the time, programming is done inside a programming environment; Microsoft’s Visual Studio, for example, provides an editor, compiler, and help for many of Microsoft’s programming languages.
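As a small, hedged illustration of source code being translated into lower-level instructions, the Python sketch below compiles one line of source code with the built-in `compile` function and lists the resulting instructions with the standard `dis` module. One caveat: Python translates source into bytecode for its own virtual machine rather than directly into the CPU’s ones and zeroes, as a C or C++ compiler does, but the principle of turning readable text into machine-oriented instructions is the same.

```python
import dis

# Human-readable source code, written as text.
source = "total = price * quantity"

# Python translates the source into a code object containing bytecode.
code_object = compile(source, "<example>", "exec")

# Show the low-level instructions Python generated from that one line.
dis.dis(code_object)

# Running the compiled code requires the variables it refers to.
namespace = {"price": 3, "quantity": 4}
exec(code_object, namespace)
print(namespace["total"])  # 12
```

The exact instructions printed by `dis.dis` vary between Python versions, which is itself a reminder that the same source code can be translated differently for different platforms.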

Software programming was originally an individual process, with each programmer working on an entire program, or several programmers each working on a portion of a larger program. However, newer methods of software development include a more collaborative approach, with teams of programmers working on code together.

When the personal computer was first released, it did not serve any practical need. Early computers were difficult to program and required great attention to detail. However, many personal-computer enthusiasts immediately banded together to build applications and solve problems. These computer enthusiasts were happy to share any programs they built and solutions to problems they found; this collaboration enabled them to innovate and fix problems quickly.

As software began to become a business, however, this idea of sharing everything fell out of favor, at least with some. When a software program takes hundreds of man-hours to develop, it is understandable that the programmers do not want to just give it away. This led to a new business model of restrictive software licensing, which required payment for software, a model that is still dominant today. This model is sometimes referred to as closed source, as the source code is not made available to others.

There are many, however, who feel that software should not be restricted. Just as with those early hobbyists in the 1970s, they feel that innovation and progress can be made much more rapidly if we share what we learn. In the 1990s, with Internet access connecting more and more people together, the open-source movement gained steam.

Open-source software is software that makes the source code available for anyone to copy and use. For most of us, having access to the source code of a program does us little good, as we are not programmers and won’t be able to do much with it. The good news is that open-source software is also available in a compiled format that we can simply download and install. The open-source movement has led to the development of some of the most-used software in the world, including the Firefox browser, the Linux operating system, and the Apache web server. Many also think open-source software is superior to closed-source software. Because the source code is freely available, many programmers have contributed to open-source software projects, adding features and fixing bugs.

Many businesses are wary of open-source software precisely because the code is available for anyone to see. They feel that this increases the risk of an attack. Others counter that this openness decreases the risk because the code is exposed to thousands of programmers who can incorporate code changes to quickly patch vulnerabilities.

There are many arguments on both sides about the benefits of the two models. Some benefits of the open-source model are:

• The software is available for free.

• The source code is available, so it can be examined and reviewed before the software is installed.

• The large community of programmers who work on open-source projects leads to quick bug fixes and feature additions.

Some benefits of the closed-source model are:

• By providing financial incentive for software development, some of the brightest minds have chosen software development as a career.

• Technical support from the company that developed the software.

Today there are thousands of open-source software applications available for download. For example, as we discussed previously in this chapter, you can get the OpenOffice productivity suite. One good place to search for open-source software is sourceforge.net, where thousands of software applications are available for free download.

Chapter 2 Assignment

This assignment is designed to expose you to the journey and evolution of information technology.

Create a timeline of major events that have occurred within information technology. *Note: A timeline is a visual, not simply an outline.

Steps/Questions

- Using the Internet as a resource, you need to create a timeline of major events that include the following:

- Major IT event for each decade: 1960s, 1970s, 1980s, 1990s, 2000s, 2010s, 2020s.

- For 2020s you need to list what you think will happen during this decade.

- For all other decades you need to list the event and describe the importance of the event.

- Each decade should have one major event.

- Next, add these major innovations to your timeline. Include when they began and when you believe they reached maturity (for example, when they peaked in use; define what maturity means to you).

- Smartphones

- PCs

- Wearables

- Applications

- Social Media

- The timeline can be of any design of your choosing but should look as clean and professional as possible. *Tip: Use PowerPoint!

Grading

A satisfactory submission will have a complete timeline.

A beyond satisfactory submission will likely have an organized, appealing timeline with relevant events and a complete description of the listed technologies. Additional data/references may be used to support opinion-based responses.